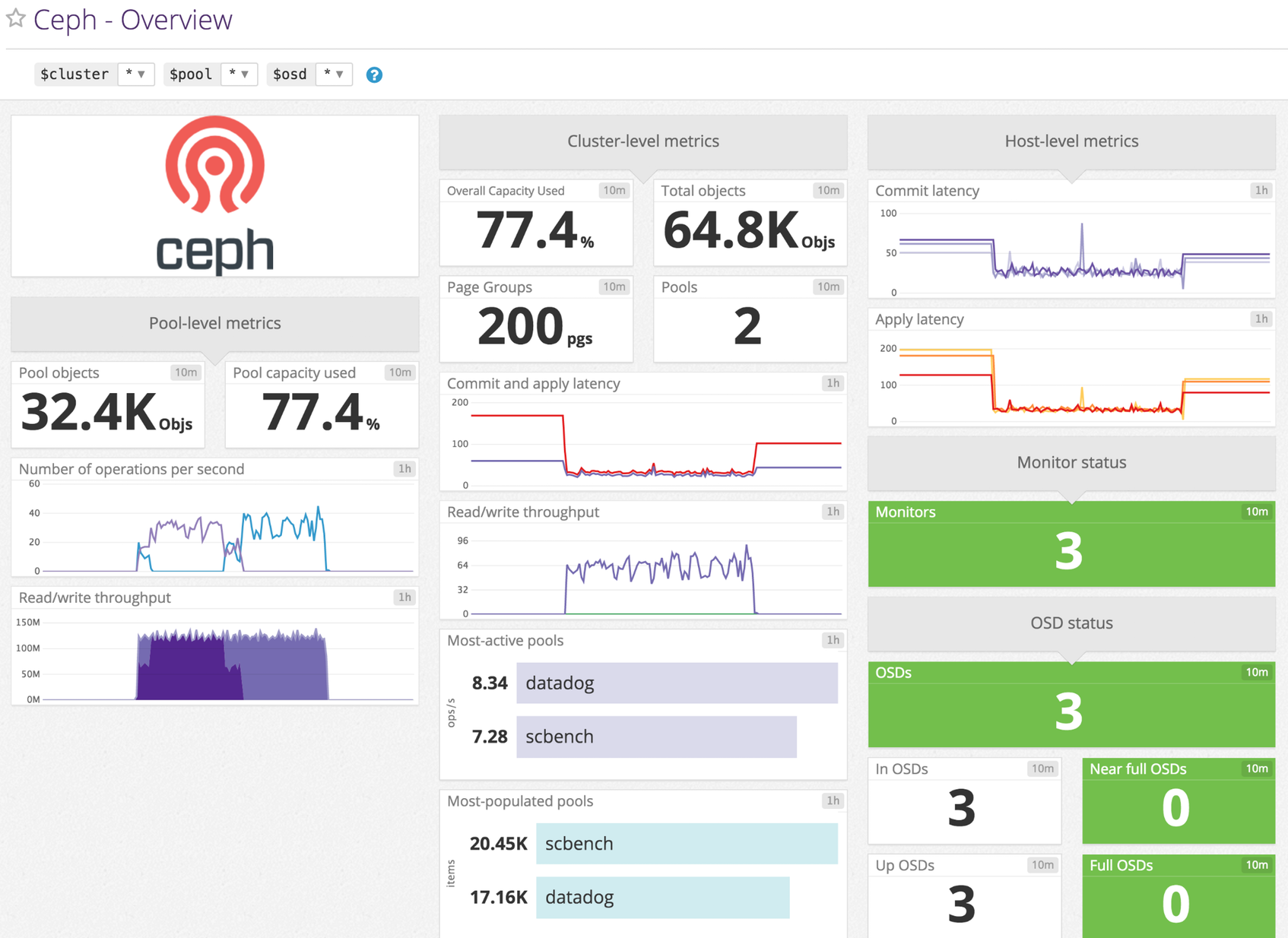

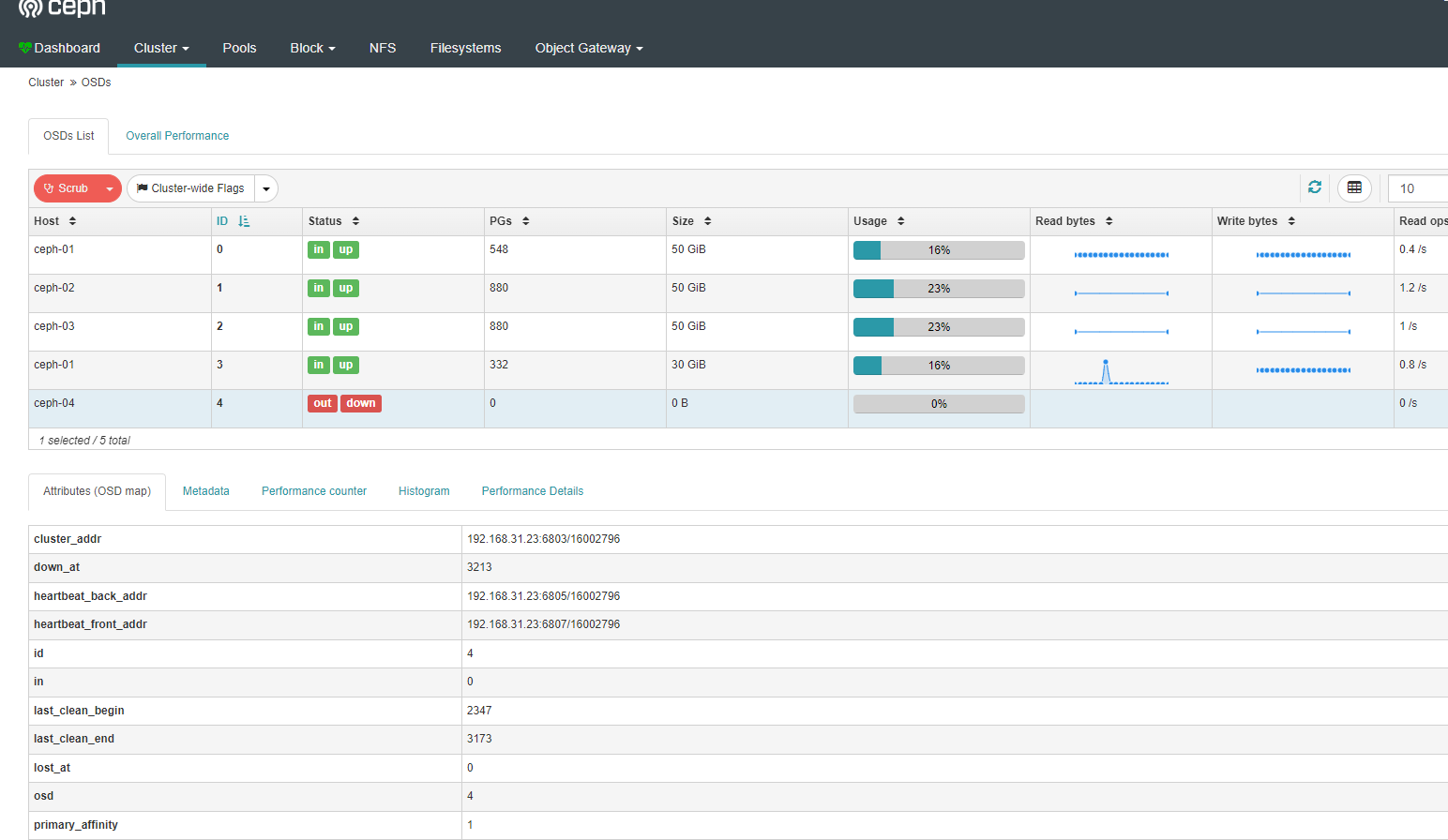

Feature #24573: mgr/dashboard: Provide more "native" dashboard widgets to display live performance data - Dashboard - Ceph

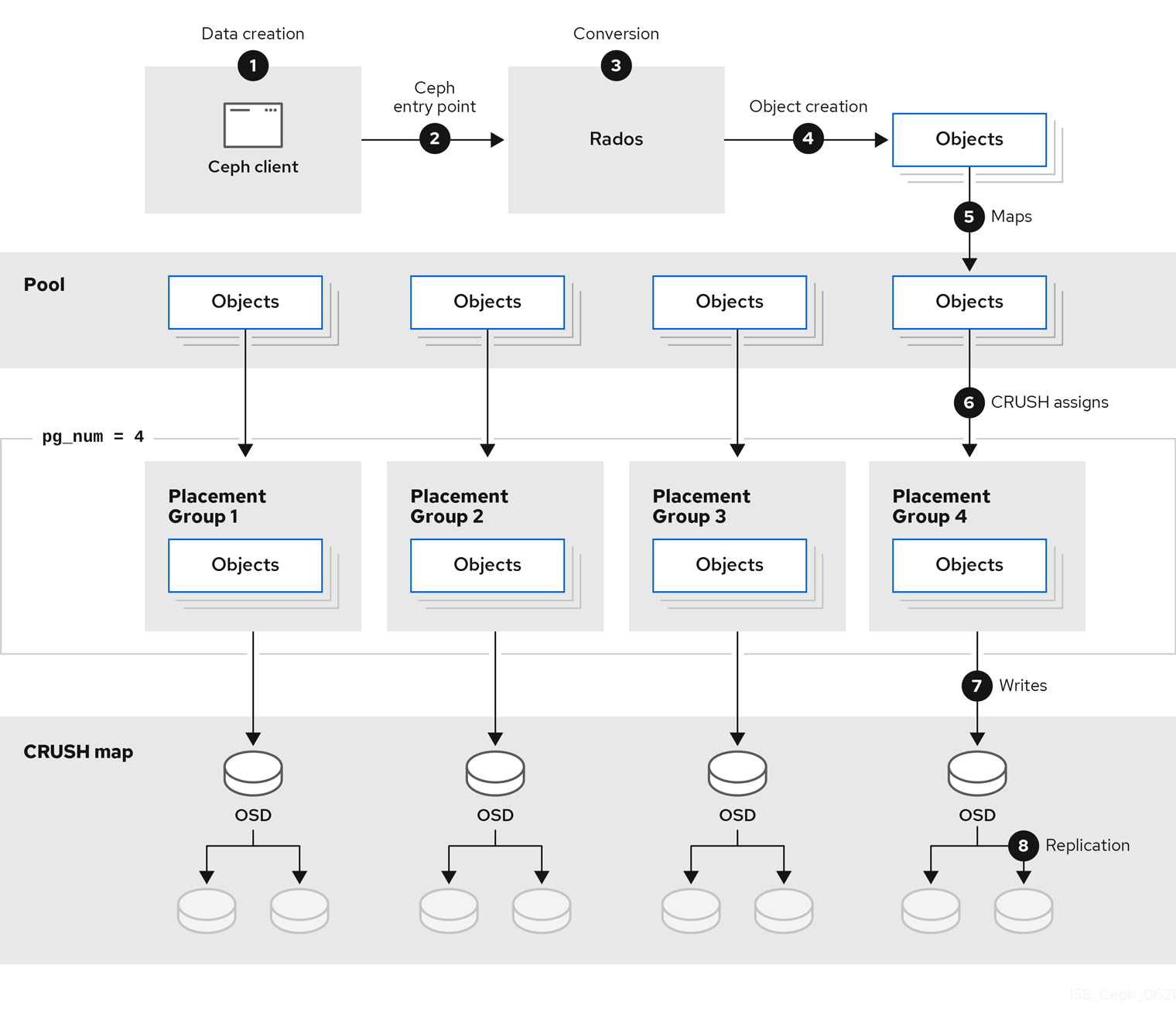

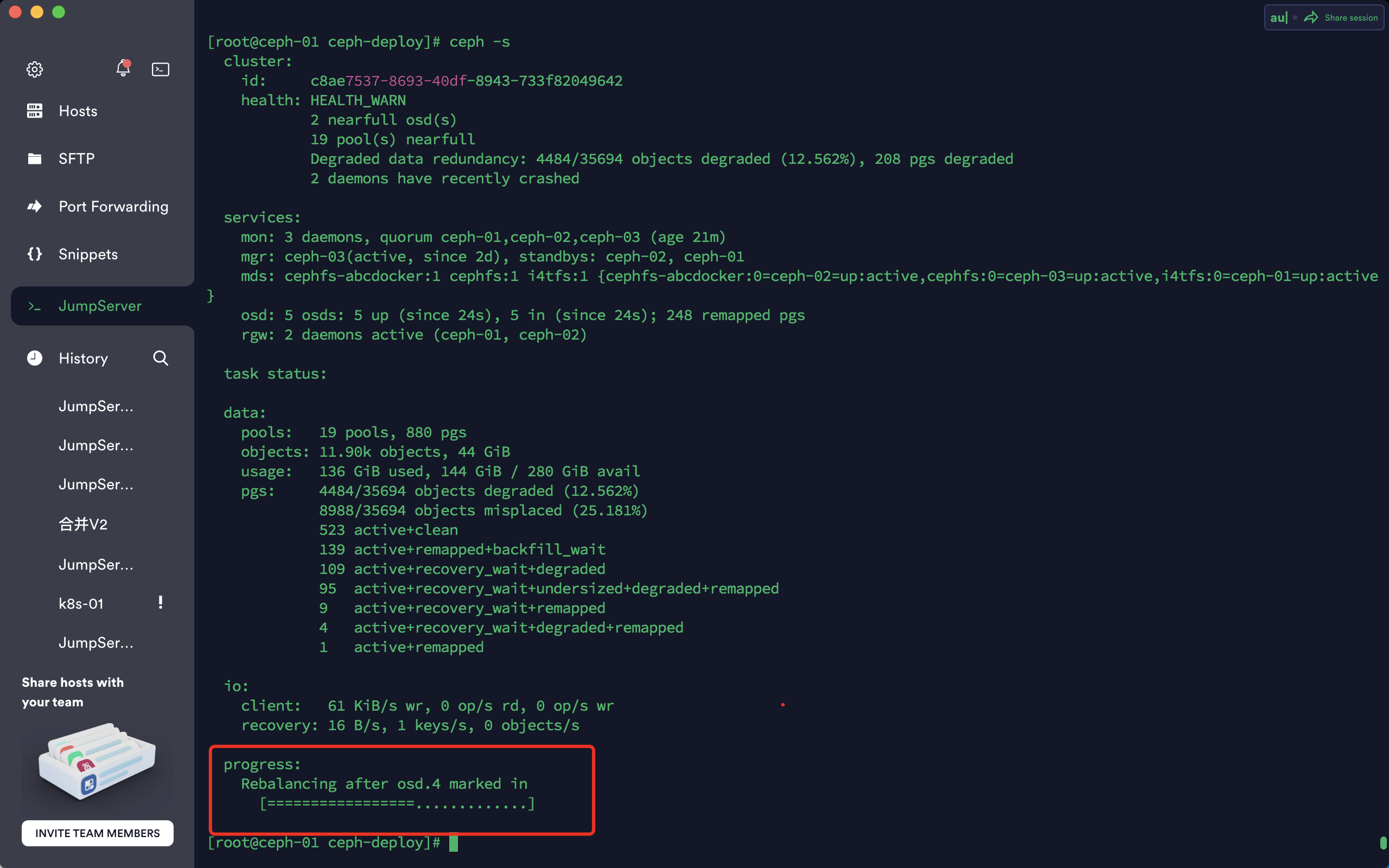

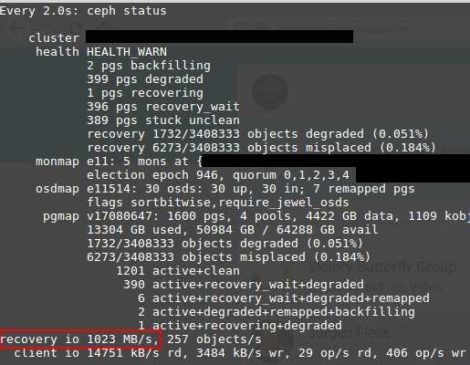

Why ceph cluster is `HEALTH_OK` even though some pgs remapped and objects misplaced? · rook/rook · Discussion #10753 · GitHub

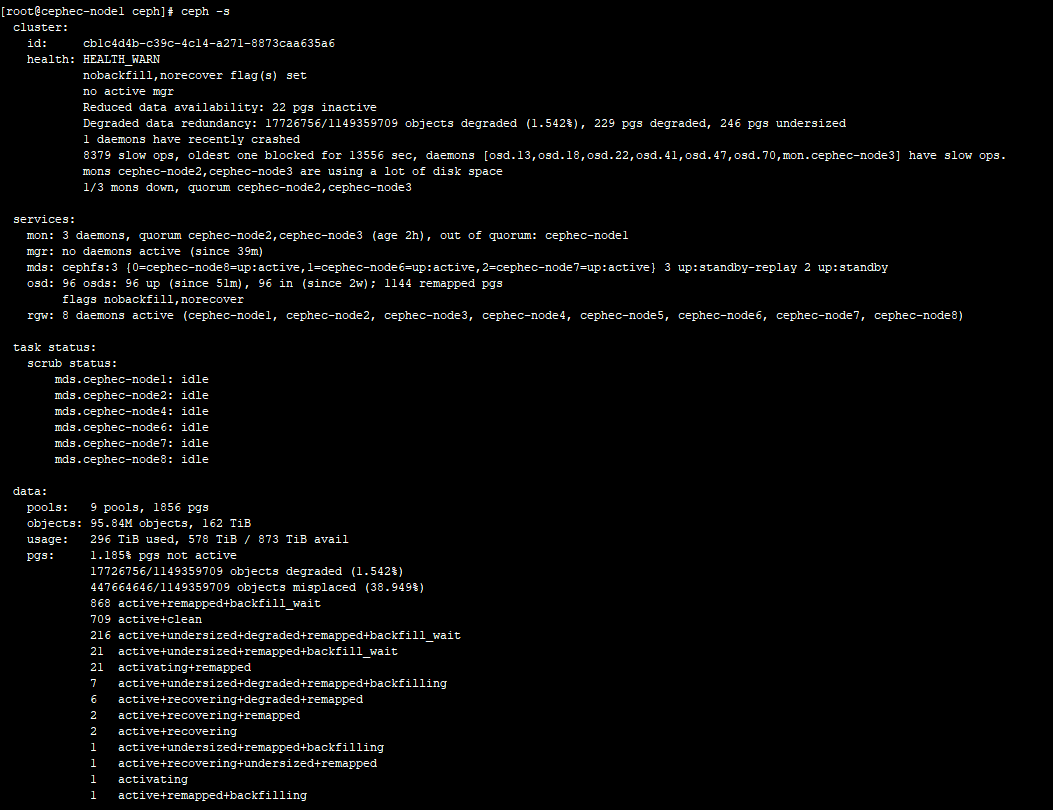

EC pool constantly backfilling misplaced objects since v1.12.8 upgrade · Issue #13340 · rook/rook · GitHub